Gaurav Agarwal

Using NFS Persistent Volumes Across Pods in ReadWriteMany Mode

Mounting Google Filestore as an NFS share to multiple Pods

Network File System (NFS) is file storage provided across a network. It lets you mount the same storage resources across multiple hosts, which makes it an excellent choice for storing and sharing information across various systems. It is particularly helpful when you want multiple instances of your application to serve in HA mode.

From a performance point of view, NFS has traditionally been slower than SAN-based persistent disks. However, managed services available in the cloud have largely closed this gap. For example, GCP’s Filestore provides the following IOPS for different categories of file shares

Secure Your Kubernetes Cluster With AppArmor

A hands-on guide to enabling AppArmor for your container workloads

AppArmor is a standard Linux Security Module implementation that allows you to enforce fine-grained control over your Linux system, over and above the group and user-level permissions. So, it helps restrict your programs to only the limited set of resources, files, and other permissions it needs to work. In addition, it enables you to implement the Principle of Least Privilege within your container applications.

Most containers available in the market use base images that are standard Linux distributions. While using a distribution like Alpine as a base image can reduce the attack surface to a large extent, as it does not contain unnecessary package managers and other bloatware, we still need to restrict the container process to do only what it is intended to do, i.e., it should only modify the files and run the commands that it needs to; everything else should be denied.

Effective Discount Due to Cashback Is Less Than You Thought

Using Python and matplotlib to find the cashback coefficient

You might have assumed that a 10% cashback on something amounts to a 10% discount. That is what most businesses lead their customers to believe. Well, you’ll be surprised that, in reality, the effective discount due to cashback is less than you thought. Let me explain how.

Assuming that you spend $100 every time you purchase something from the store, and you get a 10% cashback every time you shop as future credits, let’s look at how much money you actually save.
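To make the arithmetic concrete, here is a minimal Python sketch. It assumes, purely for illustration, that cashback is earned on the cash you actually pay and that all accumulated credit is redeemed on the very next purchase; the function name and its parameters are hypothetical and a store's actual scheme may differ.

```python
def effective_discount(rate: float, price: float = 100.0, purchases: int = 50) -> float:
    """Return the fraction of the total list price saved via cashback credits.

    Assumptions (illustrative only): credit is earned on the cash actually
    paid, and all available credit is redeemed on the next purchase.
    """
    credit = 0.0     # store credit available for the next purchase
    cash_paid = 0.0  # total money actually spent
    for _ in range(purchases):
        cash = price - credit  # redeem all available credit
        cash_paid += cash
        credit = cash * rate   # credit earned for the next purchase
    return 1 - cash_paid / (price * purchases)


if __name__ == "__main__":
    for n in (1, 2, 10, 100):
        print(f"{n:>3} purchases: {effective_discount(0.10, purchases=n):.2%}")
```

Under these assumptions the saving starts at zero on the first purchase (you have no credit yet) and approaches rate / (1 + rate) as the number of purchases grows, which is about 9.09% for a 10% cashback, never the full 10%.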

Secure Your Kubernetes Cluster With Seccomp

A hands-on guide to applying the principle of least privilege to a container’s syscalls

Kubernetes has been around for a while, and it has since become very popular with tech enthusiasts as well as serious businesses. While it seeks to improve the way we deploy and run applications, and it’s a quantum leap in itself, it is a relatively new technology taking steps to mature. A particular focus of Kubernetes has always been security, and there are multiple ways we can tackle it. One such method is by using Seccomp.

Seccomp stands for secure computing and is a standard Linux Kernel feature since version 2.6.12. …

Getting Started With Kustomize

Declarative management of Kubernetes resources with a hands-on example

Kubernetes is one of the well-known container orchestrators and has led to the development of an entire ecosystem around it. It has allowed organizations to manage their container applications with ease by providing several resources to manage container deployments, replicas, scaling, service discovery, and networking through a single API Interface.

Most organizations have multiple environments to develop and test these applications before deploying them into production. Configuration between these environments might differ, and there might be several aspects that you may want to tweak.

There are various ways to manage your Kubernetes resources for multiple environments, such as Helm and…

How To Set Up Argo CD With Terraform To Implement Pure GitOps

Declarative continuous deployment for your Kubernetes workloads

Argo CD is an extremely popular declarative, GitOps-based continuous delivery tool. It is an open source tool and part of the Cloud Native Computing Foundation (CNCF).

It is effortless to install and set up, and it offers various features and a jazzy UI to manage all your application requirements. In addition, the tool is Kubernetes-aware and helps you implement GitOps by continuously syncing your Kubernetes resource manifests from Git to your Kubernetes cluster.

Why Argo CD?

It allows teams to achieve GitOps, which has the following principles:

Git is the single source of truth.

Git is the single place to operate all environments…

A First Look at Google Kubernetes Engine Autopilot

The new serverless solution using the friendly Kubernetes API

We are at a historic juncture at the moment. Google has attempted to put a serverless solution behind a friendly Kubernetes API. It recently launched GKE Autopilot, which offers us a serverless option while running the popular managed Kubernetes solution.

So, instead of launching a GKE cluster with worker nodes within your Google Cloud environment, you can now offload all the management hassle to Google’s SREs and focus entirely on your application while using the friendly Kubernetes API.

That means you don’t have to work around anything within your applications and can use the serverless solution with ease. Plus, you…

Docker Container Security With Anchore Grype

Run a vulnerability scanner on your container images within CI/CD pipelines

With the advent of the cloud and container orchestrators, containers are becoming more commonplace. Docker is one of the most popular container runtimes that we use, and Docker images are everywhere. However, as it is a relatively new technology — and with the increased focus on shift-left — container security is a hot topic.

Most enterprises focus on runtime container security. However, sometimes the containers themselves have a vulnerability at build time that goes undetected to the untrained eye.

Containers use layers, and most containers are built from third-party base images that are available on Docker Hub. So, even if…

Redefine Your Cloud Journey With Terraform and Packer

Do Infrastructure and Config As Code the right way

For most DevOps professionals, creating a VM usually consists of spinning it up on a cloud using Terraform and then using a config management tool (e.g. Ansible or Puppet) or a bootstrap script (e.g. cloud-init) to convert the raw Virtual Machine to a purposeful server.

We all have been doing it for a long time and it works for most cases, but it comes with some drawbacks.

I will give you an example from personal experience. We have a horizontally scalable web server running on GCP using managed instance groups (MIG). …

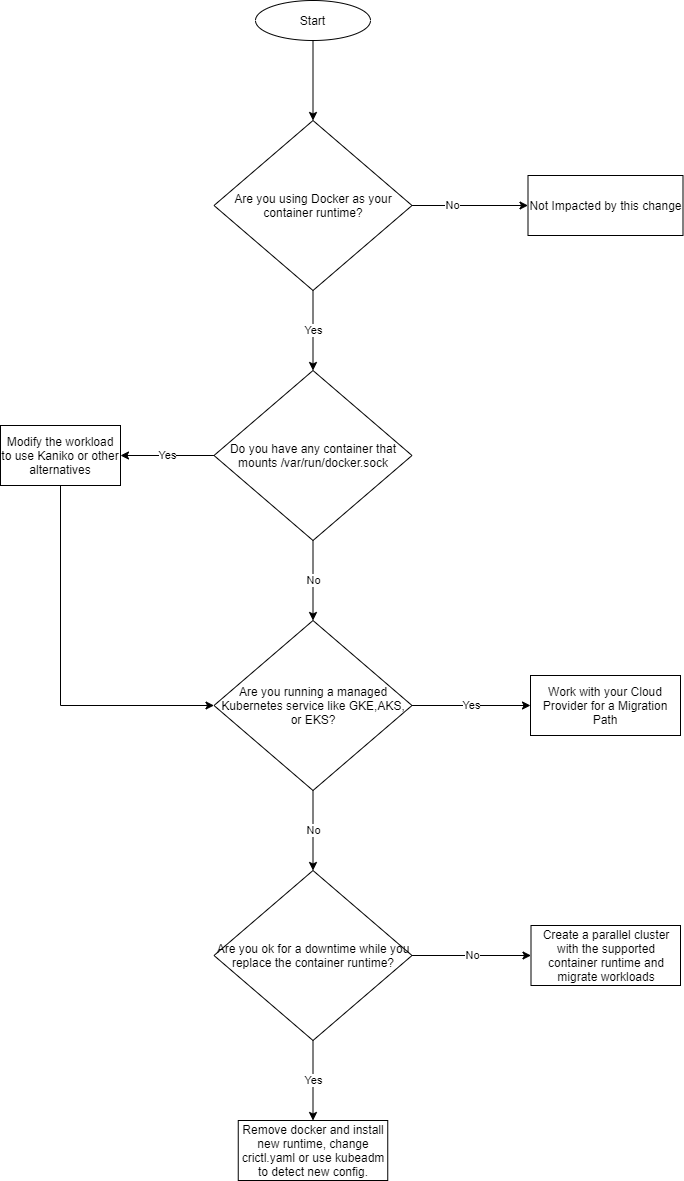

Kubernetes Is Deprecating Docker

What’s the change, who’s impacted, how does one migrate, and why it isn’t a reason to panic?

As most of us have heard, Kubernetes is deprecating Docker as a runtime from v1.20 in favour of runtimes using the Container Runtime Interface (CRI), such as containerd and CRI-O.

It isn’t a reason to panic, though. First of all: it’s a deprecation — i.e., you’ll start getting a warning from v1.20, so you aren’t immediately impacted. You still have a full year to come up with a plan, as Docker will be unsupported at v1.22, which they’ll roll out in late 2021.

Even if you aren’t ready by that time, you can choose to not upgrade to v1.22 until such a time that you think you’re ready. …

Understanding Kubernetes Multi-Container Pod Patterns

A guide to Sidecar, Ambassador, and Adapter patterns with hands-on examples

A Kubernetes Pod is the basic building block of Kubernetes. Comprising one or more containers, it is the smallest entity you can break Kubernetes architecture into.

When I was new to Kubernetes, I often wondered why they designed it so. I mean, why did containers not become the basic building block instead? Well, after a bit of doing things in a real environment, it makes more sense now.

So, Pods can contain multiple containers for some excellent reasons, primarily the fact that containers in a pod get scheduled on the same node in a multi-node cluster. …

Policy As Code on Kubernetes With Kyverno

Enforce Kubernetes best practices for your organisation with CRD

Kubernetes has been able to revolutionise the cloud-native ecosystem by allowing people to run distributed applications at scale. Though Kubernetes is a feature-rich and robust container orchestration platform, it does come with its own set of complexities. Managing Kubernetes at scale with multiple teams working on it is not easy, and ensuring that people do the right thing and do not cross the line is difficult.

Kyverno is just the right tool for this. It is an open source, Kubernetes-native policy engine that helps you define policies using simple Kubernetes manifests. …

Kubernetes Security With Falco

Comprehensive runtime security for your containers with a hands-on demo

Falco is an open source runtime security tool that can help you to secure a variety of environments. Sysdig created it and it has been a CNCF project since 2018. Falco reads real-time Linux kernel logs, container logs, Kubernetes logs, etc. against a powerful rules engine to alert users of malicious behaviour.

It is particularly useful for container security — especially if you are using Kubernetes to run them — and it is now the de facto Kubernetes threat detection engine. It ingests Kubernetes API audit logs for runtime threat detection and to understand application behaviour.

It also helps teams understand who did what in the cluster, as it can integrate with Webhooks to raise alerts in a ticketing system or a collaboration engine like Slack. …

Understanding Kubernetes Deployment Strategies

Rolling updates, recreates, ramped rollouts, canary deployments, and more

Deployment resources within Kubernetes have simplified container deployments, and they are one of the most used Kubernetes resources. Deployments manage ReplicaSets, and they help create multiple deployment strategies by appropriately manipulating them to produce the desired effect.

Surprisingly, deployments only have two Strategy types: RollingUpdate and Recreate.

While RollingUpdate is the default strategy where Kubernetes creates a new ReplicaSet and starts scaling the new ReplicaSet up and simultaneously scaling the old ReplicaSet down, the Recreate strategy scales the old ReplicaSet to zero and creates a new one with the desired replicas immediately.

That does not limit Kubernetes’ ability, though, for more advanced deployments. There are more fine-grain controls on the deployment specification that can help us implement multiple deployment patterns and strategies. Let’s look at possible scenarios, when to use them, and how they look with hands-on examples. …
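As a rough illustration of the two strategy types described above, the following Python sketch builds a Deployment manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The helper name, the `web` application name, and the `nginx:1.25` image are hypothetical placeholders; the `maxSurge` and `maxUnavailable` values shown are the Kubernetes defaults of 25%.

```python
import json


def deployment_manifest(name, image, replicas=3, strategy="RollingUpdate",
                        max_surge="25%", max_unavailable="25%"):
    """Build an apps/v1 Deployment manifest with the chosen strategy type."""
    if strategy not in ("RollingUpdate", "Recreate"):
        raise ValueError("strategy must be 'RollingUpdate' or 'Recreate'")

    spec_strategy = {"type": strategy}
    if strategy == "RollingUpdate":
        # These knobs only apply to RollingUpdate; Recreate takes no parameters.
        spec_strategy["rollingUpdate"] = {
            "maxSurge": max_surge,              # extra Pods allowed above replicas
            "maxUnavailable": max_unavailable,  # Pods allowed to be down during rollout
        }

    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": spec_strategy,
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical example values, for illustration only.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", strategy="Recreate"), indent=2))
```

Tuning `maxSurge` and `maxUnavailable` on a RollingUpdate is one of the fine-grained controls that lets a single strategy type behave quite differently, for example a slow ramped rollout versus a fast, surge-heavy one.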

Accelerate Your CI/CD Pipelines With Kubernetes in Docker (KinD)

Understanding KinD with a hands-on example

Setting up a Kubernetes cluster is getting simpler with time. There are several turnkey solutions available in the market, and no one currently does it the hard way!

Notably, Minikube has been one of the go-to clusters for developers to get started with development and testing their containers quickly. However, the issue with Minikube is that it can only spin up a single node cluster.

Therefore, this becomes a limitation for integration and component testing, and most organisations rely on cloud-based managed Kubernetes services for that.

Integrating Kubernetes in the CI/CD pipeline and doing a test requires multiple tools, such as Terraform, a dependency on a cloud provider, and of course a CI/CD tool such as Jenkins, GitLab, or GitHub. …

Should You Get Kubernetes Certified?

An overview of CNCF’s certificate offerings and their benefits

Recently, Kubernetes has been in vogue and growing at a tremendous pace. With Kubernetes being part of CNCF and the industry taking a more cloud-native approach, Kubernetes engineers are in demand as never before.

The Cloud Native Computing Foundation, in collaboration with the Linux Foundation, has come up with certificate offerings that allow developers, system administrators, and cybersecurity personnel to validate their knowledge of Kubernetes. They have developed these certificates to match industry requirements and to ensure that every certified developer or system administrator has the knowledge to be called a Kubernetes expert.

Unlike other tech certifications, the Kubernetes certifications offered by CNCF are open-book and completely hands-on. You get a Linux command line environment where you solve a number of labs in a limited period of time. They test you on the practical aspects of the technology rather than asking some multiple-choice questions that you can cram, regurgitate, and then completely forget about later. …

Understanding Vertical Pod Autoscaling in Kubernetes

And why you should not use it in auto mode

Vertical Pod Autoscaling is one of those cool Kubernetes features that are not used enough — and for good reason. Kubernetes was built for horizontal scaling and, at least initially, it didn’t seem a great idea to scale a pod vertically. Instead, it made more sense to create a copy of the Pod if you want to handle the additional load.

However, that required extensive resource optimisation, and if you didn’t tune your Pod appropriately, by providing a proper resource request and limits configuration, you may either end up evicting your pods too often or wasting many useful resources. …

How to Run Highly Available Kafka on Kubernetes

With Helm charts, stateful sets, and dynamic provisioning

Apache Kafka is one of the most popular event-based distributed streaming platforms. LinkedIn first developed it, and technology leaders such as Uber, Netflix, Slack, Coursera, Spotify, and others currently use it.

Though very powerful, Kafka is equally complex and requires a highly available robust platform to run on. Most of the time, engineers struggle to feed and water the Kafka servers, and standing one up and maintaining it is not a piece of cake.

With microservices in vogue and most companies adopting distributed computing, standing up Kafka as the core messaging backbone has its advantages. …

Save Up to 50% of Your Kubernetes Costs With Preemptible Instances

Running Kubernetes Ingress controllers on preemptible instances on the Google Kubernetes Engine

Containers have come a long way, and Kubernetes isn’t just changing the technology landscape — but also the organisational mindset. With more and more companies moving towards cloud-native technologies, the demand for containers and Kubernetes is ever-increasing.

Kubernetes runs on servers, and servers can either be physical or virtual. With cloud taking a prominent role in the current IT landscape, it’s become much easier to implement near-infinite scaling and to cost optimise your workloads.

Gone are the days when servers were bought in advance, provisioned in racks, and maintained manually. With the cloud, you can spin up and spin down a virtual machine in minutes and pay only for the infrastructure you provision. …

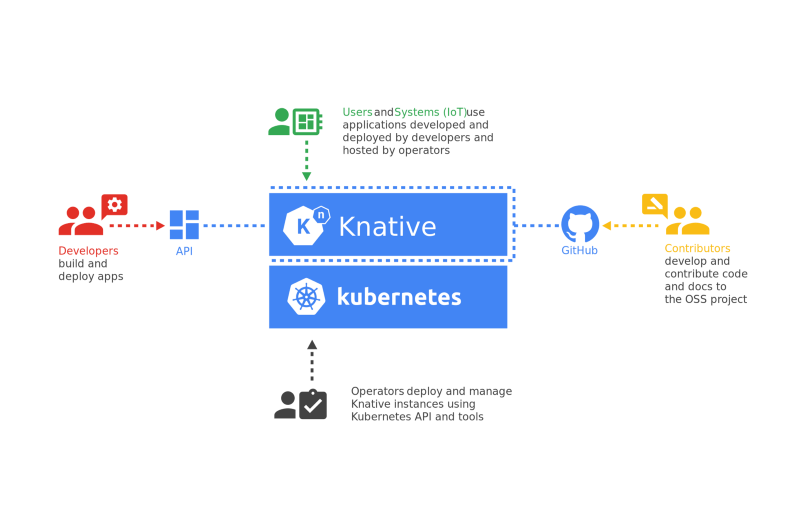

Go Serverless on Kubernetes With Knative

How Knative is the best of both worlds

If you’re already using Kubernetes, you’ve probably heard about serverless. While both platforms are scalable, serverless goes the extra mile by letting developers run code without worrying about infrastructure, and it saves on infra costs by scaling your application instances from zero.

Kubernetes, on the other hand, provides its advantages with zero limitations, following a traditional hosting model and advanced traffic management techniques that help you do things like blue-green deployments and A/B testing.

Knative is an attempt to create the best of the two worlds. As an open-source cloud-native platform, it enables you to run your serverless workloads on Kubernetes, providing all Kubernetes capabilities, plus the simplicity and flexibility of serverless. ...

Measuring Site Reliability

Demystifying SLO, SLI, SLA, and Error Budgets

We have all been in the Dev vs. Ops world where the Dev and Ops teams had different objectives, rules, and priorities. Most of the time, they opposed each other because one’s interest was the other’s problem.

Now we have DevOps. In the words of Andrew Shafer and Patrick Debois, it is “a software engineering culture and practice, that aims at unifying software development and software operation.”

Site reliability engineering implements DevOps by fostering shared ownership, applying the same tooling and techniques to never fail the same way twice while accepting failures. ...

4 Reasons to Use Kubernetes in the Serverless Era

Is serverless a replacement for Kubernetes?

Serverless is cool and has taken the tech world by storm. It is evolving quite a bit and growing at a tremendous pace. The reasons for its success are its developer-friendliness, ease of running microservices, and lower infrastructure overhead.

Serverless, or FaaS (Functions as a service), is a technology that helps you run code within dynamically managed abstracted servers. The cloud provider provides a provision to run your stateless event-triggered system without worrying about the underlying infra.

Some of the popular serverless offerings are AWS Lambda, Google Cloud Functions, and Azure Functions.

Built for scalability, it allows multiple parallel invocations of your functions, and you pay for the number of executions instead of allocated resources. ...

Tips for Rightsizing Your Kubernetes Cluster

A few large nodes or many small nodes?

Managing a Kubernetes cluster is not a one-size-fits-all problem. There are many ways to rightsize your cluster, and it is vital to design your application for reliability and robustness.

As site-reliability and DevOps engineers, you often need to understand the requirements for the applications you will be running on your cluster and then consider various factors while designing it.

Choosing the correct node size is critical when building applications for scale. ...

Designing Highly Available Container Applications on Kubernetes

With pod disruption budgets and anti-affinity

A multi-node Kubernetes cluster is highly available by design. However, that does not mean that your container applications would not be disrupted if something terrible happened with your cluster.

Kubernetes allocates a pod to a node based on several factors, such as resource availability, node availability, taints, tolerations, and affinity and anti-affinity rules. In the default setup, Kubernetes can schedule a pod on any node that meets these constraints.

Kubernetes does not try for the high availability of your applications by default. If you spin up three replicas of your pod, it will not necessarily spread them across all worker nodes. ...

My Experience With the CNCF Certified Kubernetes Administrator Exam

Tips for cracking the Certified Kubernetes Administrator Exam

I have recently completed the Certified Kubernetes Administrator exam and got a few pings on LinkedIn to share my experience, and therefore, I decided to write this blog down.

Kubernetes has gained a lot of traction in recent times, and a great way of showcasing your skills is to get certified. The Linux Foundation’s Certified Kubernetes Administrator is one of the most prestigious certifications you can aim for.

When I say prestigious, I mean it. One good reason for that is that the exam is entirely hands-on. There are no multiple-choice questions and nothing that you need to memorise.

It is therefore fulfilling, and if you love solving problems, you will undoubtedly enjoy the experience to the fullest. ...

Awesome Kubernetes Command-Line Hacks

Tips for you to kubectl like a pro

Kubernetes administrators spend most of their time keying kubectl commands and writing YAML manifest files. However, what separates an experienced Kubernetes admin from a rookie is the way they use kubectl to their advantage.

Here are some tips for saving valuable time and making the most out of kubectl.

Use Aliases

Every experienced sysadmin makes use of aliases to save valuable time typing commands. For example, instead of typing ls -l, most sysadmins use ll. Similarly, for Kubernetes, you can make use of the following helpful aliases.

k for kubectl

Use k for kubectl. Why type a seven-letter word if you can do the same thing with one letter? ...

Choose the Right Kubernetes Hosting Solution

Things to consider while selecting a Kubernetes platform

The container war is over, and Kubernetes is the clear winner! When it is about running your containers, the clear choice is Kubernetes, and there are no second thoughts about it. But what people don’t know about is that Kubernetes is a complex beast that needs taming.

Therefore, before you make that decision to install, run, and manage Kubernetes on your own, think twice. ...

Secure Your Kubernetes Cluster With Pod Security Policies

Pod security best practices with a hands-on exercise

Kubernetes security has always been a source of great interest among system architects. You still have to compromise between administrative control and developer flexibility and velocity to ensure you don’t leave any holes in your cluster.

There are various areas you need to consider to enable security within your cluster. Typically, cyber-criminals look at ways of taking control of the host worker node by hacking into a Kubernetes application. They can then use that opportunity to shut down your cluster or exploit it for illegal activities.

One way of preventing such control is by enforcing proper pod security policies within your Cluster, without impacting development velocity and adding admin overhead. ...

Monitor Your Kubernetes Cluster With Prometheus and Grafana

Using Helm to set up Prometheus and Grafana on your Kubernetes cluster

Prometheus and Grafana are some of the most popular monitoring solutions for Kubernetes, and they now come as default in most managed clusters such as GKE.

Prometheus is a graduated Cloud Native Computing Foundation project, which means that it is ready for production use, and both tools are first-class citizens among open-source monitoring tools.

With Helm, installing and managing Prometheus and Grafana on your Kubernetes cluster has become much more straightforward. It not only provides you with a hardened, well-tested setup but also provides a lot of preconfigured dashboards to get you started right away.

It also gives you the flexibility to adapt both tools according to your use case, which is a plus. While Prometheus offers the core monitoring capability of collecting metrics, indexing them, and optimising storage, Grafana provides a means for visualising the metrics in graphical dashboards. ...

How to Save Money on Google Cloud Platform

Use Cloud Scheduler to start and stop Compute Engine Instances automatically

Google Cloud Platform is one of the fastest-growing cloud platforms, maintaining a steady position at number three, behind AWS and Azure. It prides itself on its network quality and its edge in data science and engineering.

Its Kubernetes engine is one of the most mature in the market. And because Kubernetes was Google’s baby, it’s more versatile than Azure’s AKS or AWS’s EKS.

Compute Instances is one of the most competitive IaaS solutions that Google Cloud offers. With sustained use discounts, it works out better than other cloud providers for companies running pay-as-you-go instances.

Azure is cheaper in the IaaS segment when you use dedicated instances, but that creates vendor lock-in, something that most organisations avoid. A multi-cloud model is the most suitable tactic, and most companies are adapting to the times, where cost saving and optimisation are the highest priorities. ...

Managing Multiple Environments in Terraform

How to use Terraform workspaces to manage multiple states

Terraform has revolutionised the way we look at infrastructure, and with Cloud, it is a stepping stone toward “everything as code.” That is a quantum leap in the history of computing, where everything, including hardware and operating systems, is virtualised and can be defined as code.

Terraform has simplified the lives of infrastructure architects, admins, and organisations alike, and it helps in building a computing infrastructure that is ever-changing as well as more scalable and elastic than ever before.

Terraform uses a declarative, high-level, and immutable set of code to define infrastructure. Infrastructure admins would typically just declare what infrastructure they would like to have without worrying about the internal API calls. ...

Effective Ways of Managing Your Terraform State

Collaboration in your team with remote-state back ends

Terraform is one of the most popular infrastructure as code (IaC) tools available. It isn’t only one of the most active open-source projects, but it’s also cutting edge to the point that whenever AWS releases a new service, Terraform has a resource ready even before AWS’s CloudFormation.

Terraform is a declarative IaC software. That means admins declare what infrastructure they want, instead of worrying about the nitty-gritty of writing scripts to provision them. That makes Terraform extremely simple to learn and manage.

It’s incredibly flexible and supports multiple cloud providers. It’s extendable to the point that any cloud provider can create its own provider plugin and release it to its users. The users can then use HashiCorp Configuration Language (HCL) scripts for templating their infrastructure deployments and automating infrastructure like never before. ...

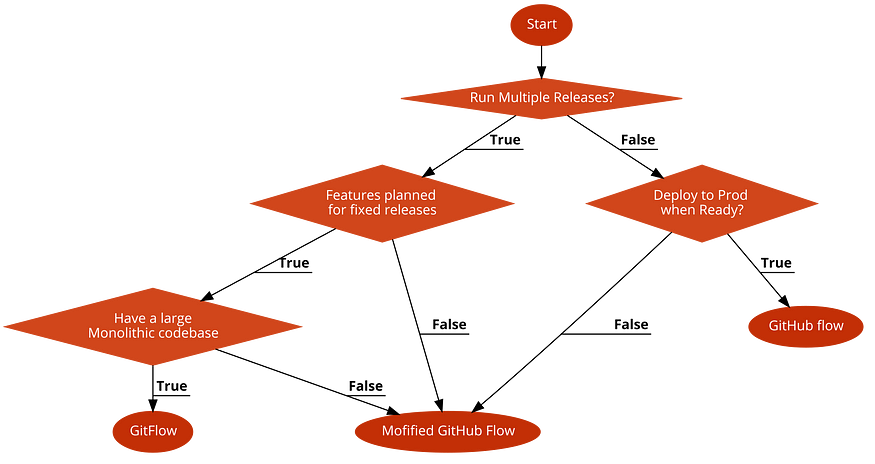

Choosing the Right Git Branching Strategy for Your Team

Analysis of Multiple Branching Strategies with a Flowchart

Git is the most popular version control and source code management tool currently available in the market. It has revolutionised the way we looked at version control with its distributed repository structure that helps developers detach and work on their local repo and attach back to push changes to the remote repo. Git maintains the same version control within the local repo as it keeps in the remote, as the local repo is just a clone of the remote.

Every organisation using Git has some form of branching strategy if its people work in a team and deliver useful software. There is no single right branching strategy, and which one is best has always been a point of contention between developers and industry experts. ...

Monitor your Kubernetes Resources With kubewatch

Watch Kubernetes events, and send notifications to Slack

Kubernetes is a powerful container orchestrator that provides you with many features to manage your application workloads. The sheer flexibility of Kubernetes has further popularised containers, and it’s now a standard in most setups.

Though Kubernetes is feature-rich, it doesn’t provide an out-of-the-box monitoring solution. You’d need to install monitoring tools such as Prometheus or Datadog on top of your cluster.

Kubewatch is an open-source Kubernetes watcher written in Go and developed by Bitnami Labs. ...

Kubernetes Services over HTTPS With Istio’s Secure Gateways

Expose your microservices over TLS to the external world

Istio provides you with many features that help you connect, secure, control and observe your microservices. It gives Kubernetes much control on top of what it’s generally capable of. Istio gateways are a powerful resource that allows you to define entry points into your service mesh from the external world.

In the previous article, we exposed the BookInfo application to the HTTP protocol. ...

Encrypting Kubernetes Secrets With Sealed Secrets

How to store your Kubernetes secrets in Git

GitOps is a way of managing all your configurations through Git. It allows teams to version and manage environment configuration and infrastructure through declarative code.

While Kubernetes allows teams to manage their container workloads using resource manifests, storing Kubernetes Secrets in a Git repository has always been a challenge.

Kubernetes Secrets are resources that help you store sensitive information, such as passwords, keys, certificates, OAuth tokens, and SSH keys.

It decouples secret management and utilisation. While admins can create secrets, developers can simply refer to the Secret resource in their manifests instead of hardcoding the password within their pod definition.

That all sounds good, but the problem with Kubernetes secrets is they store sensitive information as a base64 string. ...
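To see why a base64 string offers no protection, consider this small Python sketch using only the standard library. The helper names and the sample password are hypothetical; the point is simply that base64 is a reversible encoding, not encryption, so anyone who can read the manifest can recover the value.

```python
import base64


def encode_secret_value(plaintext: str) -> str:
    """Encode a value the way it appears under `data:` in a Secret manifest."""
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Recover the original value; no key or password is required."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    encoded = encode_secret_value("s3cr3t-password")  # hypothetical sample value
    print("stored in manifest:", encoded)
    print("recovered by anyone:", decode_secret_value(encoded))
```

That is why committing a plain Secret manifest to Git is effectively the same as committing the password itself.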

How to Harden Your Containers With Distroless Docker Images

Use distroless images to secure your containers on Kubernetes

Containers have changed the way we look at technology infrastructure. It is a quantum leap in the way we run our applications. Container orchestration, along with the Cloud, provides us with a capability of seamless expansion on a near-infinite scale.

Containers, by definition, are supposed to contain your application and its runtime dependencies. However, in reality, they contain much more than that. A standard container base image contains a package manager, shells, and other programs that you would find in a standard Linux distribution.

While all these aspects are necessary for building container images, they need not form the part of the image. For example, once you’ve installed the packages, you no longer need apt within the container. ...

How to Harden Your Kubernetes Cluster With Kube Bench

Comprehensive CIS benchmark testing for your Kubernetes cluster

Kubernetes is no doubt the most popular container orchestration platform. Its success is partly due to the flexibility and the number of features it offers. Though an open-source tool, it has a huge developer base from leading tech companies on the planet, including Google.

Kubernetes security has been a critical talking point in recent times. Because of the growing interest in Kubernetes and the fact that more and more organisations are adopting it to run their production applications, cybercriminals are looking at ways of exploiting some common misconfiguration of Kubernetes.

Configuring Kubernetes security is a task. There are various ways of configuring Kubernetes, and it provides a great deal of flexibility to allow organisations to adapt it to their own security needs. However, with time some standards and best practices in Kubernetes security have evolved. ...

Demystifying DevOps

DevOps is no longer an option — either you adopt it or you lose

DevOps has been here for a while and taken the entire tech industry by storm. There was a time when there was much confusion about what DevOps is, what problem it solves, and whether it’s really needed.

It seems to have settled now, and more than 90% of the tech industry have either adopted DevOps or are on the journey of utilising it. DevOps is no longer an option: either you embrace it or you lose the competition.

While most tech companies are adopting DevOps, there are a multitude of developments going on. ...

Locality-Based Load Balancing in Kubernetes Using Istio

Route requests within your service mesh using geographic location to improve performance and save money

Istio is one of the most feature-rich and robust service meshes for Kubernetes on the market. It is an open-source tool developed by Google, Lyft, and IBM and is quickly gaining popularity.

One of its significant features is traffic management. Istio provides a Layer 7 Proxy that helps you route traffic on multiple factors, such as HTTP headers, source IP, URL path, and hostname.

Organisations that have a global presence often run a high-scale global service mesh that involves microservices spread across the globe. Microservices need to interact with each other to provide complete functionality to customers. ...

How to Validate Your Kubernetes Cluster With Sonobuoy

Run comprehensive conformance testing for your Kubernetes cluster

Kubernetes is the de facto leader in container orchestration. As Kubernetes is an open-source, robust, and flexible platform, there are many implementations of it available in the market.

You can host cloud-native managed Kubernetes clusters (such as GKE, AKS, and EKS), run an on-premise installation with kubeadm, or set up Kubernetes the hard way. There are multiple ways of hosting Kubernetes, and each has its use cases.

With time, specific standards have evolved in the industry, and most setups need to conform to them for optimal performance and security.

As Kubernetes has multiple moving parts, it baffles most system administrators, and most of them wonder whether their configuration is correct and set up the way it should be. ...

How to Build Containers in a Kubernetes Cluster with Kaniko

Automate container builds within K8s without a Docker daemon

Traditionally, organisations have built Docker images outside the Kubernetes cluster. However, with more and more companies adopting Kubernetes and the demand for virtual machines decreasing day by day, it makes sense to run your continuous integration builds within the Kubernetes cluster.

Building Docker images within a container is a security challenge because the container needs access to the worker node file system to connect with the Docker daemon.

You also need to run your container in privileged mode. That practice isn’t recommended as it opens up your nodes to numerous security threats. ...

How to Continuously Deliver Kubernetes Applications With Flux CD

GitOps for your Kubernetes workloads

Flux CD is a continuous delivery tool that is quickly gaining popularity. Weaveworks initially developed the project and later donated it to the Cloud Native Computing Foundation.

The reasons for its success are that it is Kubernetes-aware and straightforward to set up. The most promising feature it delivers is that it allows teams to manage their Kubernetes deployments declaratively.

Flux CD synchronises the Kubernetes manifests stored in the source code repository with the Kubernetes cluster by periodically polling the repository, so teams don’t need to worry about running kubectl commands and monitoring the environment to see if they have deployed the right workloads. ...
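The polling-and-sync behaviour described above can be pictured as a reconcile step that compares declared state with running state. What follows is a minimal Python sketch under stated assumptions, not Flux CD's actual implementation: the `reconcile` function and the two dictionaries standing in for the repository and the cluster are hypothetical names introduced only for illustration, and the sketch assumes that resources missing from the repository should be deleted.

```python
def reconcile(desired, actual):
    """Return the actions needed to make `actual` match `desired`.

    Both arguments map a resource name to its manifest (any comparable value).
    """
    actions = []
    # Anything declared in the repository must exist and match in the cluster.
    for name, manifest in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != manifest:
            actions.append(("update", name))
    # Assumption: resources no longer declared in the repository are pruned.
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical state: what Git declares versus what the cluster runs.
repo = {"web": {"replicas": 3}, "api": {"replicas": 2}}
cluster = {"web": {"replicas": 1}, "old-job": {"replicas": 1}}
print(reconcile(repo, cluster))
```

A real tool would run a step like this on every polling interval and apply the resulting actions through the Kubernetes API rather than printing them.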

Istio Service Mesh Multi-Cluster Kubernetes Environment

Manage microservices running on multiple Kubernetes clusters in a single service mesh

Imagine you work for a typical enterprise, with multiple teams working together, delivering separate pieces of software that make up a single application. Your teams follow a microservices architecture and have a widespread infrastructure made of multiple Kubernetes clusters.

As the microservices spread across multiple clusters, you need to architect a solution to manage all microservices centrally. Fortunately, you’re using Istio, and providing this solution is just another configuration change.

A service mesh technology like Istio helps you securely discover and connect microservices spread across multiple clusters and environments. ...

Traffic Mirroring in Kubernetes Using Istio

How to run robust operational acceptance tests for your Kubernetes microservices

Having multiple versions of your application running at the same time gives you a fair amount of flexibility. It enables you to switch over and switch back traffic between various versions as required. It allows you to do canary releases, A/B testing, and controlled rollouts to production.

With the advent of microservices and container orchestration platforms like Kubernetes to support them, running multiple versions side by side has become the new norm. With Istio, you can create a robust deployment and release strategy through traffic mirroring.

This article is a follow up to “Kubernetes Services over HTTPS With Istio’s Secure Gateways.” ...

How to Authorize Non-Kubernetes Clients With Istio on Your K8s Cluster

Use JSON web tokens to authorize clients to interact with your Kubernetes microservices using Istio

Istio is one of the most sought-after Kubernetes-aware service mesh technologies, and it grants you immense power if you host microservices on Kubernetes.

In my last article, “Enable Access Control Between Your Kubernetes Workloads Using Istio,” we discussed how to use Istio to manage access between Kubernetes microservices.

That works well for internal communication. However, most use cases require you to authorise non-Kubernetes clients to connect with your Kubernetes workloads - for example, if you expose APIs for third parties to integrate with.

Istio furnishes this capability through its Layer 7 Envoy proxies and utilises JSON Web Tokens (JWT) for authorisation. ...

Enable Access Control Between Your Kubernetes Workloads Using Istio

A guide to Istio authorization between your microservices within Kubernetes

Istio is one of the most popular service mesh technologies that you can run over Kubernetes to control your microservices effectively. Apart from allowing traffic management and visualization, Istio also provides a lot of fine-grained layer 7 security features for your Kubernetes workloads.

While you can use the Kubernetes network policy to build a layer 3 firewall, it does not provide the advanced security features offered by most modern firewalls and intrusion detection software.

Istio fills this gap by providing granular control for your workloads. ...

Enable Mutual TLS Authentication Between Your Kubernetes Workloads Using Istio

A guide to Istio authentication and mutual TLS between your microservices on Kubernetes

In software engineering, access control generally consists of two parts, authentication and authorisation. While authentication refers to understanding whether the requestor is what it claims to be, authorisation refers to specifying what actions the requestor is allowed to perform.

This article is a follow up to “How to Harden Your Microservices on Kubernetes Using Istio”. Today, let’s discuss how to enable mutual TLS authentication between your Kubernetes microservices using Istio.

Prerequisites

This article assumes that you have knowledge of Kubernetes and Microservices and are aware of Istio. For an introduction to Istio, I recommend you check out How to Manage Microservices on Kubernetes With Istio. ...

How to Harden Your Microservices on Kubernetes Using Istio

How Istio implements security for your microservices

Running microservices in production provides many benefits. Some of them are independent scalability, agility, the reduced scope of change, frequent deployments, and reusability. However, they come with their own set of challenges.

With a monolith, the idea of security revolves around securing a single application. However, a typical enterprise-grade microservice application may contain hundreds of microservices interacting with each other.

Kubernetes provides an excellent platform for hosting and orchestrating your microservices. However, all interactions between the microservices are insecure by default. They communicate over plaintext HTTP, which might not be enough to satisfy your security requirements.

To apply the same principles on microservices that you would use on a typical enterprise monolith, you need to ensure the...

How to Visualise Your Istio Service Mesh on Kubernetes

Use Prometheus and Grafana to visualise the metrics of your microservices

If you’re running Istio to manage your microservices within Kubernetes, collecting and visualising your metrics is one of the key features it provides. It gives you a lot of control and power over your mesh and allows you to understand your microservices better.

It not only provides your operations team with useful insights to troubleshoot issues but also provides your security team with valuable data. That helps them do things like hooking your mesh up to intrusion-detection software to secure your application further.

This story is a follow up to “How to Use Istio to Inject Faults to Troubleshoot Microservices in Kubernetes.” Today, let’s discuss collecting and visualising the Istio service mesh using Prometheus and Grafana. ...

How to Use Istio to Inject Faults to Troubleshoot Microservices in Kubernetes

Improve your microservices running on Kubernetes

Imagine you’ve deployed the reviews-v2 microservice into production, and you have an issue. Users are complaining they can’t see the reviews in your application intermittently.

You’ve run a thorough investigation in your development environment, but you’re not able to find what the problem is.

The only way to investigate the problem is by looking at the traffic flowing through your production services. Someone from your operations team notices timeouts in the chain, and you need to investigate further.

This story is a follow-up to “Locality-Based Load Balancing in Kubernetes Using Istio.” Today, let’s discuss fault injection and troubleshooting.

What’s Fault Injection?

Fault injection, in the context of Istio, is a mechanism by which we can purposefully inject issues into our mesh to mimic how our application would behave if it encountered such problems. ...
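To make the idea concrete, here is a minimal Python sketch of percentage-based fault injection around a request handler. It illustrates the general technique only and is not how Istio or Envoy implement it: `with_faults`, `reviews`, and every parameter name are hypothetical, chosen for this example.

```python
import random
import time


def with_faults(handler, abort_percent=0.0, abort_status=503,
                delay_percent=0.0, delay_seconds=0.0,
                rng=None, sleep=time.sleep):
    """Wrap `handler` so a share of requests is delayed and/or aborted."""
    rng = rng or random.Random()

    def wrapped(request):
        # Delay fault: hold the request to mimic a slow upstream service.
        if rng.random() * 100 < delay_percent:
            sleep(delay_seconds)
        # Abort fault: fail the request without calling the real handler.
        if rng.random() * 100 < abort_percent:
            return abort_status, "fault injected"
        return handler(request)

    return wrapped


def reviews(request):
    # Hypothetical stand-in for the real reviews service.
    return 200, "reviews for " + request


always_fail = with_faults(reviews, abort_percent=100)
print(always_fail("book-1"))
```

Setting a percentage between 0 and 100 fails or delays only that share of requests, which is how intermittent problems like the one in the scenario above can be reproduced on purpose.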

How to Manage Traffic Using Istio on Kubernetes

Insights into request routing, traffic splitting and user identity-based routing using Istio on Kubernetes

Traffic management is one of the core features of Istio. If you are using Istio to manage your microservices on Kubernetes, you can have fine-grained control over how they interact with each other. That will also help you define how the traffic flows through your service mesh.

This story is a follow-up to Getting Started With Istio on Kubernetes. Today, let’s discuss traffic management.

In the last article, we installed Istio on our Kubernetes cluster and deployed a sample Book Info application on it. ...

Getting Started With Istio on Kubernetes

Install and configure Istio within your Kubernetes cluster

Istio is by far the most popular service mesh that integrates with Kubernetes very well. For a microservices architecture, Istio is not only useful to have but a necessity. This story is a follow-up to How Istio Works Behind the Scenes on Kubernetes. Today, let’s discuss setting up Istio in your Kubernetes cluster.

Prerequisites

You need to have a running Kubernetes cluster to install Istio. If you are running on a cloud, a managed service like Google Kubernetes Engine would be a perfect fit, as it allows automatic sidecar injection.

I am going to discuss how to install Istio in the cloud using GKE. However, there are only minor differences if you are running it within your self-hosted or on-premise Kubernetes cluster. ...

How Istio Works Behind the Scenes on Kubernetes

Insights into Istio architecture and how its various moving parts manage microservices in Kubernetes

If you’re doing microservices on Kubernetes, then a service mesh like Istio can work wonders for you. This article is a follow up on “How to Manage Microservices on Kubernetes With Istio.” Today, let’s discuss Istio architecture.

Istio helps you manage microservices through two major components:

Data Plane. These are the sidecar Envoy proxies Istio injects into your microservices. These do the actual routing between your services and also gather telemetry data.

Control Plane. This is the component that tells the data plane how to route traffic. It also stores and manages the configuration and helps administrators interact with the sidecar proxy and control the Istio service mesh. ...

How to CI/CD on Google Cloud Platform

Using Cloud Build, Google Container Registry, and Cloud Run to continuously build and deploy a simple Java application

Google Cloud Platform is one of the leading cloud providers in the public cloud market. It provides a host of managed services, and if you are running exclusively on Google Cloud, it makes sense to use the managed CI/CD tools that Google Cloud provides.

A typical Continuous Integration & Deployment setup on Google Cloud Platform looks like this:

Google Cloud CI/CD

Developer checks in the source code to a Version Control system such as GitHub

GitHub triggers a post-commit hook to Cloud Build.

Cloud Build builds the container image and pushes to Container Registry.

Cloud Build then notifies Cloud Run to redeploy

Cloud Run pulls the latest image from the Container Registry and runs it. ...

How Corporate Parasites thrive in the workplace

Ways to identify and weed out Perception Management from your organisation

In my initial years as a graduate techie, straight out of the university, I was very excited when I joined my dream tech firm. I was finally going to do something interesting, and there was a lot to learn and do. As most freshers have the typical spark and want to try out stuff, naturally I had the urge to do things quickly and make complicated things seem manageable.

Honestly, that wasn’t to gain brownie points but for my internal satisfaction and my wish to learn more. I thought that I would reap the rewards for my work. ...

How to Manage Microservices on Kubernetes With Istio

How to implement DevSecOps in a microservices architecture with a service mesh

Containers and container orchestration platforms like Kubernetes have simplified the way we run microservices.

Container technology helped popularize the concept as it made it possible to run and scale different components of the applications in self-contained units with their independent runtimes.

While a microservices architecture enables faster delivery by reducing the scope of change to smaller parts, improving system resilience, simplifying testing, and scaling parts of the application independently, implementing it has its challenges.

Microservices can be a management and security nightmare because, instead of having one monolithic application, you now have multiple moving parts, each catering to specific functionality.

An extensive app can utilize hundreds of microservices interacting with each other and soon you may find things are overwhelming. The main questions your security and operations teams may ask you...

How to Scale Kubernetes Applications Using Custom Metrics

Scale your containers using custom Stackdriver metrics that are important to your business

If you look at the most popular features of Kubernetes, chances are that autoscaling will top the list. Kubernetes provides a resource called “HorizontalPodAutoscaler,” which is a built-in feature to scale your containers based on multiple factors, such as resource utilisation (CPU and memory), network utilisation, or traffic within the pods. If your cluster is nearing the resource limit and you need to add more nodes in the cluster, then managed Kubernetes services such as GKE provide Kubernetes Cluster Autoscaler out of the box.
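As a rough illustration of how the HorizontalPodAutoscaler arrives at a replica count, the Python sketch below applies the scaling formula given in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name `desired_replicas` is hypothetical, the 0.1 tolerance is an assumed default, and real autoscalers also respect minimum and maximum replica bounds, which are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     tolerance=0.1):
    """Estimate the replica count an HPA-style controller would aim for."""
    ratio = current_metric / target_metric
    # Assumption: ratios close to 1.0 are ignored to avoid constant rescaling.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Four pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

The same calculation works for a custom metric: only the numbers passed in as the observed and target values change.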

Imagine you have launched an app and you naturally started small, as you did not anticipate much demand. However, a social media post gets your application to go viral and suddenly people realise how cool it is. Now everyone wants to use your app and there is a massive surge in traffic! You get worried because you don’t know whether your application can take on the additional load. ...

How to Secure Kubernetes Using Network Policies

An illustrated guide to Kubernetes network policies

With more and more organisations adopting Kubernetes and running it in production, it is a necessity to understand the core of it and secure it appropriately. Kubernetes changes the traditional concept of running a separate virtual machine for every application and instead allows you to forget about the underlying infrastructure and just deploy pods in a cluster of generic nodes. That not only simplifies the architecture but also makes managing infrastructure easy.

Kubernetes is an open-source container orchestration platform, and the code base is available online on GitHub. While this enables contribution from the community, it also provides hackers with an opportunity to find loopholes and prepare for an attack. Most automation tools that set up Kubernetes cater to a variety of users, and therefore they do not enforce all security by default. ...

How to Discover non-Kubernetes applications using Kubernetes Service Discovery

How to use Ingress Resources to discover Non-Kubernetes services using NGINX and BIND DNS

Kubernetes provides excellent built-in service discovery through Kubernetes Services and uses CoreDNS to resolve services internally within the Kubernetes cluster. ...

How to Secure Kubernetes the Easy Way

How to use Terraform and Kubeadm to bootstrap your Kubernetes cluster

Kubernetes has become a standard for running and managing container-based applications with more and more organisations moving towards and adopting it within their landscape. ...

How to utilise X509 Client Certificates & RBAC to secure Kubernetes

How We Effectively Managed Access to Our Kubernetes Cluster

In most organisations, adopting Kubernetes starts with developers experimenting and then running a Proof of Concept. They then spread the word, and decision-makers start getting interested and see the value. Our Kubernetes journey followed a similar path. We started with a Proof of Concept for our small five-member development team, ran a pilot with a little six-node Kubernetes cluster, and soon the management became interested in the platform and wanted to adopt it across the organisation.

Well, doing a Proof of Concept is one thing, and running Kubernetes for a sizeable multi-discipline organisation is another beast. Managing Kubernetes within the small group wasn’t tricky, and we started with sharing the admin.conf file. This file gives root access to anyone using the cluster. Therefore, this was not going to work with multiple teams working separately. We needed to do some serious thinking regarding organising, controlling access, and securing the cluster. If you don’t secure your Kubernetes cluster correctly, terrible things may happen, and it can not only hamper a company financially but also result in a loss of reputation and potential lawsuits no admin would want. ...

How to Create a Highly Available NGINX Load Balancer on Google Cloud Platform

Highly Available NGINX Load Balancer on Google Cloud using Pacemaker, Corosync and Static IPs on CentOS 7

With the advent of virtualised cloud-based infrastructure, more and more organisations are migrating to the cloud. There are a lot of advantages in doing so, but near-infinite scalability, high availability, cost reduction, and performance improvement are significant drivers.

Most scalable and highly available applications run behind some form of a load balancer. Google Cloud Platform, which is one of the leading cloud providers, enables multiple types of load balancing as a managed service for your needs. If you want to know more about it, then please check out https://cloud.google.com/load-balancing/docs/choosing-load-balancer.

Google Cloud also provides a great flowchart to choose between Load Balancers for your specific requirements. ...

How to Secure Kubernetes the Hard Way

An adaptation of Kelsey Hightower’s “Kubernetes the Hard Way” using Terraform to create a hardened cluster on CentOS running Docker

Kubernetes is open-source, robust, and one of the most popular container orchestration platforms available in the market. However, because of its complexity, not everyone can secure it appropriately.

Experienced system administrators also struggle with its multiple moving parts and numerous settings (which they built in to provide flexibility).

That complexity might be the reason you end up with a disaster. ...

How to Harden Your Kubernetes Cluster for Production

Best practices for securing your Kubernetes cluster in production

Kubernetes has changed the way organizations deploy and run their applications, and it has created a significant shift in mindsets. While it has already gained a lot of popularity and more and more organizations are embracing the change, running Kubernetes in production requires care.

Although Kubernetes is open source and does have its share of vulnerabilities, making the right architectural decisions can prevent a disaster from happening.

You need to have a deep level of understanding of how Kubernetes works and how to enforce the best practices so that you can run a secure, highly available, production-ready Kubernetes cluster.

Although Kubernetes is a robust container orchestration platform, the sheer level of complexity with multiple moving parts overwhelms all administrators. ...

How to Terraform With Jenkins and Slack on Google’s Cloud Platform

An illustrated guide to HashiCorp’s Terraform

Terraform, provided by HashiCorp, is the most popular Infrastructure as Code (IaC) tool. With the advent of cloud and self-serviced infrastructure, a groundbreaking concept called Infrastructure as Code emerged.

The reason for this is simple. Before IaC, teams used to build and configure infrastructure manually. Slowly and gradually, the infrastructure grew in size and complexity, leading to inconsistency, which led to multiple issues such as cost, scalability, and availability.

Infrastructure built manually needs to be maintained manually. ...

How We Scaled Jenkins in Less Than a Day

Scale your Jenkins agents using Kubernetes

If you’re alive and are an engineer, then chances are that you’re already using Jenkins - or have at least heard about it.

Jenkins is the most popular open-source continuous integration and continuous delivery (CI/CD) tool in the market. The reason for its popularity? Solid backing by organisations like CloudBees, excellent community support, thousands of plugins with a huge developer base, and the sheer simplicity of its setup and use.

This allows organisations to hook up Jenkins with popular version-control tools such as Git, Subversion, and Mercurial; to integrate with code-analysis software such as SonarQube and Fortify; to run Maven and Gradle builds; to execute JUnit and Selenium tests; and much more. ...

Don’t Do Agile, Be Agile

Why Scrum is not the silver bullet

If your organisation didn’t lock itself up in the 1990s, the chances are that you follow Agile. For most people, Agile means Scrum. In many job interviews with prospective candidates, I always hear, “We follow Scrum, and therefore we are Agile.” Well, this is not always true.

Being Agile is a behavioural trait rather than a method that people follow. Yes, Agile methodologies like Scrum do help teams be more Agile, but doing Scrum does not mean you are Agile. Agility comes from a simple fact: how quickly you can adapt to changing business needs and requirements. ...

How to Helm With Sonatype Nexus

Using Sonatype Nexus as a Helm repository for CI/CD

Helm is the first and the most popular package manager for Kubernetes. It allows DevOps teams to version, distribute, and manage Kubernetes applications.

Although one can live with standard kubectl commands and Kubernetes manifest YAML files, when organisations work on microservice architecture - with hundreds of containers interacting with each other - it becomes a necessity to version and manage the Kubernetes manifests.

Helm is now becoming a standard for managing Kubernetes applications and a necessary skill for anyone working with Kubernetes.

Why Helm?

The obvious question is: Why do we need Helm? Let’s find out.

Helm makes templating applications easy

A Docker image forms a template for a Docker container. You can use one Docker image to create multiple containers from it. ...

Kubernetes For Beginners

Kubernetes, for a more general audience

Kubernetes has been around for a while. It’s the leading-edge platform that’s changed the way we look at information technology today. The project was started by a bunch of Google developers as a way to orchestrate containers, and they later donated it to the Cloud Native Computing Foundation. Today it is one of the most popular systems and the de facto standard for running your containers.

There are various reasons for that. We’ll look into what Kubernetes is in the first place, why it is so popular, and what problem it solves, in plain and simple words. ...

Demystifying Kubernetes Objects

Understanding the what, the why, and the how

Kubernetes is now the de facto standard for container orchestration. There are many reasons for its popularity. One is the number of features it brings with it, as a result of which there are a considerable number of Kubernetes objects.

In Kubernetes, there are multiple ways to achieve a result, which also causes a lot of confusion among DevOps professionals. This article seeks to address the what, how, and why of some often-used Kubernetes objects.

Understanding how Kubernetes works

Kubernetes uses a simple concept to manage containers. There are master nodes (control plane) which control and orchestrate the container workloads, and the worker nodes where the containers run. ...

Using the Azure Kubernetes Provider in a VM Based K8S Cluster

How to Hook in the Azure Cloud Provider for Persistent Volume Claims and Load Balancers with your K8S cluster

There are multiple ways to set up a Kubernetes cluster, and some organisations want to start from scratch instead of using managed Kubernetes services. Because Azure does not currently offer a private master, organisations that use Azure as a cloud provider have no visibility of the master nodes, and some have security constraints that prevent them from using a managed service such as AKS. ...